25 Things I Believe About Building Data Products

Last week I read Peter Yang's "25 Things I Believe in to Build Great Products."

His first principle? "Speed is the only moat."

I nodded. Then I stopped.

I've spent 9 years building data products in healthcare. I started at a consulting company in Nashville, worked for a massive health system, led analytics at a company scaling from Series A to exit, and shipped three products solo in six months using AI tools.

Peter's framework is excellent. For most products it's awesome, honestly. But data products play by different rules, so there is some nuance.

In software, the feature is the value. In data products, the data is the value. Different game entirely. You can't recreate context. You can't speed your way to a new workflow.

Here's what I actually believe. Some of this will sound obvious. Some of it contradicts what's popular. All of it comes from things I got wrong before I got them right.

Trust is Compounding

1. Trust compounds slowly and is destroyed instantly.

Morgan Housel wrote that growth is driven by compounding, which takes time, but destruction is driven by single points of failure, which can happen in seconds.

That's data products in a sentence. You spend months building credibility. One bad number in front of the wrong executive and you're back to zero. I've seen it happen. I've caused it to happen.

2. Your data quality IS your reputation.

In SaaS, a bug is annoying. In data products, a wrong number is existential. When someone pulls a metric from your product and puts it in a board deck, your reputation is on the line. They don't remember the 47 accurate numbers. They remember the one that made them look foolish.

3. Credibility comes before features.

I used to think we needed more dashboards, more metrics, more capabilities. Wrong. What we needed was for people to trust the three dashboards we already had. Feature roadmaps don't matter if your users are double-checking your numbers in Excel.

4. Validation is not bureaucracy.

In my 20s as a data engineer/analyst, I resented data quality checks. They slowed us down. Then I shipped a cohort analysis with a join bug that inflated our numbers by 40%. The client's analyst found it before we did—in a board meeting. Now I don't ship anything without validation. It feels slow. It's actually the fastest path to trust.
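A join bug of this kind often comes from fan-out: a key assumed to be unique on the lookup side repeats, so rows multiply and totals inflate. Here is a minimal sketch of a pre-ship check in plain Python. The `visits` and `patients_*` rows, the field names, and the helper functions are all hypothetical, made up for illustration rather than taken from any real pipeline.

```python
from collections import Counter


def duplicate_keys(rows, key):
    """Return the key values that appear more than once in rows."""
    counts = Counter(row[key] for row in rows)
    return sorted(k for k, n in counts.items() if n > 1)


def safe_lookup_join(facts, dims, key):
    """Inner-join each fact row to exactly one lookup row.

    Raises ValueError if the lookup side has duplicate keys,
    because that is what silently multiplies fact rows.
    """
    dupes = duplicate_keys(dims, key)
    if dupes:
        raise ValueError(f"duplicate {key} values in lookup table: {dupes}")
    by_key = {row[key]: row for row in dims}
    joined = [{**by_key[f[key]], **f} for f in facts if f[key] in by_key]
    # Against a unique key, an inner join can keep or drop rows, never add them.
    assert len(joined) <= len(facts)
    return joined


# Hypothetical data: patient 2 was loaded twice into the dirty lookup table.
visits = [
    {"patient_id": 1, "cost": 100},
    {"patient_id": 2, "cost": 250},
]
patients_clean = [
    {"patient_id": 1, "site": "A"},
    {"patient_id": 2, "site": "B"},
]
patients_dirty = patients_clean + [{"patient_id": 2, "site": "B"}]

joined = safe_lookup_join(visits, patients_clean, "patient_id")
total_cost = sum(row["cost"] for row in joined)
```

Calling `safe_lookup_join(visits, patients_dirty, "patient_id")` raises instead of returning an inflated total. The point of the design is that the failure is loud and happens before anyone sees a number.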

5. Your users are experts: treat them that way.

Doctors, nurses, seasoned healthtech sales reps, pharma leaders, and researchers know their domain better than you ever will. When they say a number looks wrong, they're usually right. I've learned more from users questioning my dashboards than from any product review. The skeptics are your best QA team.

Teams Need Operating Systems

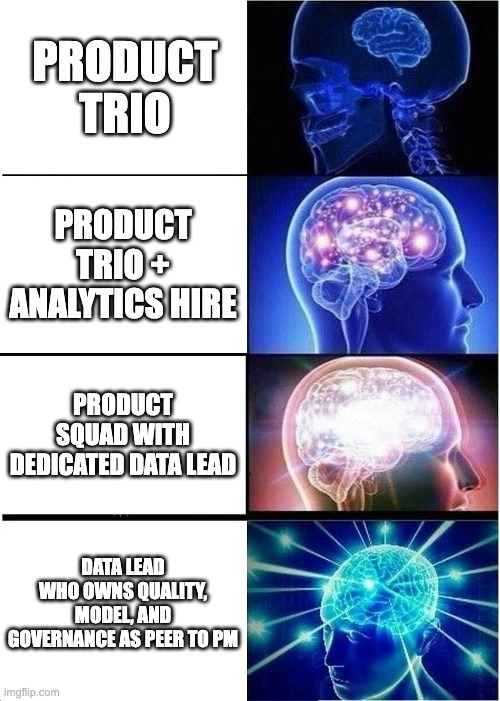

6. Data products need the Product Squad, not the Trio.

The Product Trio (PM, Tech Lead, Design Lead) works for SaaS. Data products need a fourth seat: the Data Lead. Someone who owns data quality, data model, and data governance as first-class concerns. Without this, data quality lands on everyone—which means nobody actually owns it.

Learn more in "Beyond the Product Trio - Why Data Products Need a Squad."

7. Each role needs its own operating system.

I spent years trying to force Scrum onto data teams. It doesn't fit. Data scientists need deep exploration time. Data engineers need uninterrupted flow. Analysts need rapid iteration. No single methodology covers all three. Each role needs workflows tuned to how they actually think. (Hint, hint: more to come here.)

8. Handoffs are where things break.

The interesting part of team coordination isn't the ceremonies—it's the handoffs. When the PM's requirements become the engineer's spec. When the analyst's insight becomes the designer's dashboard. I once watched a three-month project fail because the handoff between analysis and engineering lost a critical assumption about how dates were formatted. One team assumed UTC. The other assumed local time. Nobody wrote it down. Make these explicit. Document what context needs to transfer. Most failures trace back to a lossy handoff.
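The UTC-versus-local mix-up is easy to reproduce. Below is a minimal sketch of one way to make the assumption explicit at the handoff: reject timestamps that don't state their timezone. The fixed UTC-6 offset, the example timestamp, and the `to_utc` helper are assumptions for illustration only.

```python
from datetime import datetime, timedelta, timezone

# Assumption for illustration: the "local" team works at a fixed UTC-6 offset.
LOCAL = timezone(timedelta(hours=-6))


def to_utc(ts: datetime) -> datetime:
    """Convert a timestamp to UTC, refusing naive values outright."""
    if ts.tzinfo is None or ts.utcoffset() is None:
        raise ValueError("naive timestamp: the handoff must state its timezone")
    return ts.astimezone(timezone.utc)


# Hypothetical event recorded late in the evening, local time.
admitted_local = datetime(2024, 3, 1, 23, 30, tzinfo=LOCAL)
admitted_utc = to_utc(admitted_local)

# 23:30 at UTC-6 is 05:30 the next day in UTC, so even the calendar date differs.
local_date = admitted_local.date()
utc_date = admitted_utc.date()
```

A naive value such as `datetime(2024, 3, 1, 23, 30)` fails immediately with a `ValueError`, which forces the timezone question to be answered at the handoff rather than three months later.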

9. Ceremonies designed for software teams suffocate data teams.

Two-week sprints fragment data work that requires deep exploration. Daily standups interrupt flow states. Sprint planning assumes you know what you'll discover. You don't. Data work is exploratory by nature. Your process needs to accommodate that.

10. The best teams ship context, not just artifacts.

A data model without documentation is a liability. A dashboard without a data dictionary is a guessing game. The artifact is only half the deliverable. The context—why it exists, what it assumes, where it breaks—is what makes it usable.

The Ground is Always Shifting

11. Data is alive, not static.

Software code does what it did yesterday. Data doesn't. Upstream systems change. Coding practices drift. New edge cases appear. What was clean last month is dirty this month. If your mental model assumes data is stable, you'll be constantly surprised.

12. Yesterday's clean data is today's mess.

I've never worked with a dataset that stayed clean. On every data product I've built, we eventually discovered data quality issues we didn't anticipate. Not because we were sloppy, but because the world changed. A new hospital joined with different coding practices. A vendor updated their schema without telling us. A field that was always populated started coming in null from one site. We had a pipeline that ran perfectly for eight months, then broke because a single provider started entering diagnoses in a free-text field instead of using the structured codes. Stuff happens. Plan for it.
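One way to plan for it is to watch null rates per source instead of only in aggregate, since one site going dark can hide inside a healthy overall average. This is a rough sketch under stated assumptions: the `site` and `dx_code` field names, the sample batch, and the 5% threshold are all hypothetical.

```python
from collections import defaultdict


def null_rate_by_site(rows, field):
    """Return {site: share of rows where field is None or empty}."""
    totals = defaultdict(int)
    nulls = defaultdict(int)
    for row in rows:
        totals[row["site"]] += 1
        if row.get(field) in (None, ""):
            nulls[row["site"]] += 1
    return {site: nulls[site] / totals[site] for site in totals}


def sites_over_threshold(rows, field, max_null_rate=0.05):
    """List sites whose null rate for field exceeds max_null_rate."""
    rates = null_rate_by_site(rows, field)
    return sorted(site for site, rate in rates.items() if rate > max_null_rate)


# Hypothetical batch: site B has stopped sending structured diagnosis codes.
batch = [
    {"site": "A", "dx_code": "E11.9"},
    {"site": "A", "dx_code": "I10"},
    {"site": "B", "dx_code": None},
    {"site": "B", "dx_code": ""},
]

flagged = sites_over_threshold(batch, "dx_code")
```

The threshold is a judgment call that depends on the field and the site. What matters is that the check runs on every load and names the source that changed.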

13. Build for change, not perfection.

In 2018, I tried to build "the perfect data model." Wasted four months. Now I build for change. Modular. Documented. Easy to extend. The perfect model doesn't exist because the requirements will evolve. Design for evolution, not permanence.

14. Governance is enablement, not control.

Data governance gets a bad reputation because it's usually implemented as a series of "no's." Good governance is actually a series of "yes, and here's how." It's documentation that helps people self-serve. It's guardrails that prevent mistakes without blocking progress.

15. Document like the future will question you.

Six months from now, someone will ask why a metric is calculated a certain way. If the answer lives only in your head, you've failed. Document your assumptions. Document your decisions. Document the edge cases you chose to ignore. Your future self will thank you.
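Documentation like this tends to survive longer when it lives next to the metric rather than in a separate wiki. Here is a minimal sketch of a metric definition that carries its own context. The `MetricDefinition` class, its fields, and the example readmission metric are hypothetical, not a standard or an existing library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricDefinition:
    """A metric plus the context someone will ask about later."""
    name: str
    calculation: str
    assumptions: tuple = ()
    ignored_edge_cases: tuple = ()
    owner: str = ""

    def missing_context(self):
        """Return the names of context fields that were left empty."""
        checks = {
            "assumptions": self.assumptions,
            "ignored_edge_cases": self.ignored_edge_cases,
            "owner": self.owner,
        }
        return [name for name, value in checks.items() if not value]


# Hypothetical, fully documented metric.
documented = MetricDefinition(
    name="readmission_rate_30d",
    calculation="readmissions within 30 days / index discharges",
    assumptions=("all timestamps are stored in UTC",),
    ignored_edge_cases=("transfers between sites are not counted",),
    owner="data-lead",
)

# Hypothetical metric whose reasoning lives only in someone's head.
undocumented = MetricDefinition(
    name="avg_length_of_stay",
    calculation="mean(discharge_date - admit_date)",
)

gaps = undocumented.missing_context()
```

A check such as `missing_context()` could run in review or CI, so a metric with empty assumptions gets questioned now instead of six months from now.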

Think in Value, Not Capabilities

16. Start with business impact, not data.

The biggest mistake data teams make is starting with "what data do we have?" instead of "what decision are we trying to improve?" I've built dashboards nobody used because we started with available data. Now I start with: what changes if this works?

17. Products over projects.

Projects end. Products evolve. When you treat data work as a project, you deliver something and walk away. When you treat it as a product, you own it through its lifecycle. You iterate. You respond to feedback. You improve. I once inherited a data warehouse that a previous team built as a "project" in 2019. That team was long gone by the time I arrived. Nobody understood why certain decisions were made. Nobody owned it. It was technically working but practically useless because nobody trusted it enough to use it. The product mindset is the difference between one-time value and compounding value.

18. If you wouldn't charge for it, don't build it.

I ask myself this question constantly: "If we had to charge our internal stakeholders for this, would they pay?" It's a brutal filter. Cuts through a lot of "nice to have" requests. If the answer is no, maybe we shouldn't build it.

19. Reusability is a design constraint, not an afterthought.

Single-use data products are expensive to maintain and hard to justify. When I design something now, I ask: "Can this serve three use cases, not just one?" Sometimes the answer is no. But asking the question changes how you design.

20. Outcome-driven beats deliverable-driven.

I used to measure success by what we shipped. Dashboards delivered. Pipelines built. Models deployed. Wrong metric. Now I measure by outcomes changed. Decisions improved. Time saved. Revenue impacted. Shipping is easy to count. Impact is what matters.

Domain Expertise is the Moat

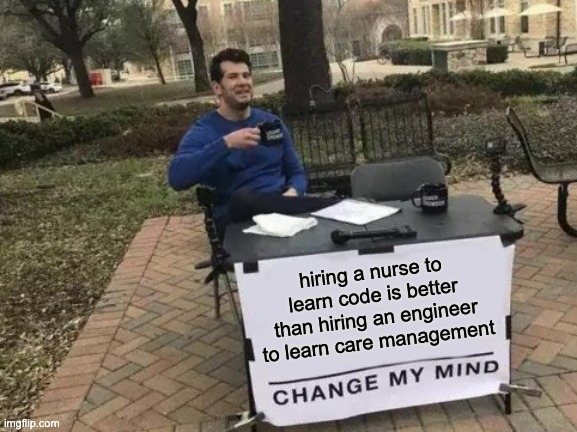

21. A nurse who can code beats a coder who doesn't understand nursing.

I've hired both. The domain expert who learned technical skills consistently outperforms the technical expert who tries to learn the domain.

(Actually, let me be more precise: consistently outperforms in the first two years. After that, the gap narrows. But most data products don't have two years to wait.)

Healthcare is complicated. Coding practices vary by system. Clinical workflows have nuance. We once had a data scientist with impeccable credentials build a model to predict patient readmissions. Technically flawless. But they'd included "arrival by ambulance" as a feature, which correlated strongly with readmissions. A nurse on the team pointed out the obvious: sick people arrive by ambulance. We weren't predicting readmission risk—we were predicting how sick someone was when they arrived. You can teach SQL. You can't quickly teach 20 years of clinical experience.

22. The business can't be Googled.

Technical problems have Stack Overflow answers. Domain problems don't. Understanding why a certain ICD-10 code is used differently across health systems isn't documented anywhere. This knowledge lives in people—clinicians, analysts, operational staff. Cultivate these relationships.

23. Healthcare doesn't work like tech.

Moves slower. Regulations constrain everything. Stakeholders are risk-averse for good reasons—mistakes hurt patients. I've watched people from consumer tech companies try to bring "move fast and break things" to healthcare. It doesn't work. The culture is different. The constraints are different. Adapt.

24. Regulated context changes everything.

HIPAA isn't just a compliance checkbox. It fundamentally shapes what you can build, how you can build it, and who can access it. I've seen beautiful product ideas die because they required data sharing that wasn't legally possible. Know your constraints before you start designing.

25. Your users know more than you—learn from them.

Healthcare analysts have spent years understanding their data, their systems, their edge cases. I approach every user conversation assuming they know something I don't. Because they do. The best product improvements I've made came from users who told me I was wrong.

These are lessons, not rules. Your context is different. Healthcare is different from fintech is different from e-commerce. Data products serving researchers are different from those serving operations.

But if I had to bet, I'd bet on trust over speed. On domain expertise over technical flash. On products that evolve over projects that end.

What do you believe about building data products that the industry hasn't figured out yet?

That's probably worth writing down. (I'm still figuring some of mine out.)